TensorRT-LLM provides 8x higher performance for AI inferencing on NVIDIA hardware.

As companies like d-Matrix squeeze into the lucrative artificial intelligence market with coveted inferencing infrastructure, AI leader NVIDIA today announced TensorRT-LLM, a software library designed to speed up large language model (LLM) inference.

What is TensorRT-LLM?

TensorRT-LLM is an open-source library that runs on NVIDIA Tensor Core GPUs. It is designed to give developers a way to experiment with new large language models, the bedrock of generative AI tools like ChatGPT.

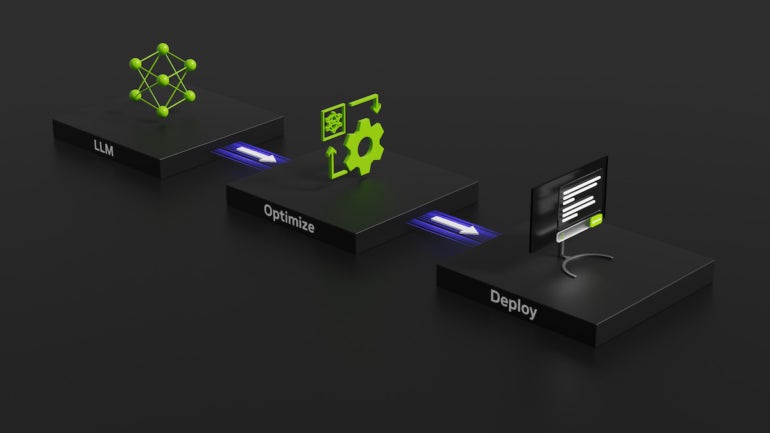

In particular, TensorRT-LLM covers inference (the stage in which an already-trained model applies what it has learned to new input to make predictions or generate text), including defining, optimizing and executing LLMs. TensorRT-LLM aims to speed up inference on NVIDIA GPUs, NVIDIA said.

TensorRT-LLM includes optimized, ready-to-run versions of many of today's heavyweight LLMs, such as Meta Llama 2, OpenAI GPT-2 and GPT-3, Falcon, Mosaic MPT, BLOOM and others.

To do this, TensorRT-LLM includes the TensorRT deep learning compiler, optimized kernels, pre- and post-processing, multi-GPU and multi-node communication and an open-source Python application programming interface.

NVIDIA notes that part of the appeal is that developers don’t need deep knowledge of C++ or NVIDIA CUDA to work with TensorRT-LLM.

“TensorRT-LLM is easy to use; feature-packed with streaming of tokens, in-flight batching, paged-attention, quantization and more; and is efficient,” Naveen Rao, vice president of engineering at Databricks, told NVIDIA in the press release. “It delivers state-of-the-art performance for LLM serving using NVIDIA GPUs and allows us to pass on the cost savings to our customers.”

Databricks was among the companies given an early look at TensorRT-LLM.

Early access to TensorRT-LLM is available now for people who have signed up for the NVIDIA Developer Program. NVIDIA said in the initial press release that the library will be available for wider release "in the coming weeks."

How TensorRT-LLM improves performance on NVIDIA GPUs

LLMs performing article summarization run faster on an NVIDIA H100 GPU with TensorRT-LLM than on a previous-generation NVIDIA A100 chip without the library, NVIDIA said. For GPT-J 6B inference, the H100 alone delivered a 4x improvement over the A100; adding the TensorRT-LLM software raised that to an 8x improvement.
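Both reported multipliers share the same A100 baseline, so the gain attributable to the software alone on the same H100 is their ratio: 8 / 4 = 2x. The short Python sketch below works through that arithmetic. The 80-second workload is a hypothetical figure chosen only for illustration, and the sketch assumes run time scales inversely with throughput.

```python
# Speedups NVIDIA reported for GPT-J 6B summarization, all relative to an
# A100 running without TensorRT-LLM. The helper names are hypothetical.

A100_BASELINE = 1.0   # A100, no TensorRT-LLM (reference point)
H100_ONLY = 4.0       # H100, no TensorRT-LLM: reported 4x
H100_TRT_LLM = 8.0    # H100 with TensorRT-LLM: reported 8x


def software_gain(hw_only: float, hw_plus_software: float) -> float:
    """Speedup attributable to the software when the GPU stays the same."""
    return hw_plus_software / hw_only


def projected_seconds(baseline_seconds: float, speedup: float) -> float:
    """Time for a job that took `baseline_seconds` on the A100 baseline,
    assuming run time scales inversely with throughput."""
    return baseline_seconds / speedup


if __name__ == "__main__":
    print(software_gain(H100_ONLY, H100_TRT_LLM))  # 2.0
    # Hypothetical 80-second A100 job.
    for label, s in [("A100", A100_BASELINE),
                     ("H100", H100_ONLY),
                     ("H100 + TensorRT-LLM", H100_TRT_LLM)]:
        print(label, projected_seconds(80.0, s))  # 80.0, 20.0, 10.0
```

In other words, roughly half of the reported 8x comes from the newer GPU and the other doubling comes from the software running on it.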

In particular, inference can be done quickly because TensorRT-LLM uses tensor parallelism, a technique that splits individual weight matrices across devices. (Weights are the learned parameters that determine how strongly a model's digital neurons influence one another.) With tensor parallelism, inference can be performed in parallel across multiple GPUs and across multiple servers at the same time.
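A minimal, CPU-only sketch of the idea in Python follows, assuming a column-wise split of a single weight matrix. TensorRT-LLM's actual sharding strategy and GPU communication are not shown, and all helper names are hypothetical. Each simulated "device" multiplies the input by its own slice of the weights, and concatenating the partial results reproduces the full matrix product.

```python
# Simulated tensor parallelism: one weight matrix is split column-wise across
# "devices" (plain list entries here; no GPU or TensorRT-LLM API is used).


def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n) using nested lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def split_columns(w, num_devices):
    """Split weight matrix w (k x n) into num_devices column shards."""
    n = len(w[0])
    assert n % num_devices == 0, "columns must divide evenly across devices"
    width = n // num_devices
    return [[row[d * width:(d + 1) * width] for row in w]
            for d in range(num_devices)]


def tensor_parallel_matmul(x, w, num_devices):
    """Each device multiplies x by its own shard of w; the partial outputs
    are then concatenated column-wise to form the full result."""
    shards = split_columns(w, num_devices)
    partials = [matmul(x, shard) for shard in shards]  # parallel on real GPUs
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


if __name__ == "__main__":
    x = [[1, 2], [3, 4]]                 # input activations
    w = [[1, 0, 2, 1], [0, 1, 1, 3]]     # weight matrix to shard
    print(matmul(x, w))                  # [[1, 2, 4, 7], [3, 4, 10, 15]]
    print(tensor_parallel_matmul(x, w, 2) == matmul(x, w))  # True
```

On real hardware each shard's multiplication runs on a separate GPU, so no single device has to hold or compute the whole matrix, and the concatenation corresponds to a communication step in which the devices exchange their partial outputs.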

In-flight batching improves the efficiency of inference, NVIDIA said. Put simply, rather than waiting for every request in a batch to finish before moving on to the next set, the runtime removes each finished sequence from the batch right away and starts new requests while others are still being generated. In-flight batching and other optimizations are designed to improve GPU usage and cut down on the total cost of ownership.
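To make the scheduling difference concrete, here is a simplified Python simulation. It assumes each decoding iteration produces one token per in-flight request, that an iteration costs the same regardless of how many requests are active, and that output lengths are known up front (in practice they are not). It compares a hypothetical baseline that waits for a whole batch to finish against in-flight batching, which evicts finished sequences every iteration and fills freed slots from the queue. The function names are illustrative, not TensorRT-LLM's API.

```python
from collections import deque


def whole_batch_steps(lengths, batch_size):
    """Iterations needed when each batch must fully finish before the next
    batch starts (hypothetical baseline)."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        batch = lengths[start:start + batch_size]
        steps += max(batch)  # the whole batch waits on its longest sequence
    return steps


def in_flight_batching_steps(lengths, batch_size):
    """Iterations needed when finished sequences are evicted after every
    iteration and queued requests immediately take their slots."""
    queue = deque(lengths)
    active = []  # tokens still to generate for each in-flight request
    steps = 0
    while queue or active:
        # Fill any free slots with waiting requests.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # One decoding iteration: every in-flight request emits one token.
        active = [remaining - 1 for remaining in active]
        steps += 1
        # Evict sequences that just finished.
        active = [remaining for remaining in active if remaining > 0]
    return steps


if __name__ == "__main__":
    requests = [8, 2, 2, 2, 2, 2]  # output lengths in tokens (made up)
    print(whole_batch_steps(requests, 2))         # 12
    print(in_flight_batching_steps(requests, 2))  # 10
```

In the baseline, the long request holds its batch open while the slot freed by its short partner sits idle; in-flight batching keeps that slot busy with queued work, which is the kind of GPU-utilization gain NVIDIA describes.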

NVIDIA’s plan to reduce total cost of AI ownership

LLM use is expensive. In fact, LLMs change the way data centers and AI training fit into a company’s balance sheet, NVIDIA suggested. The idea behind TensorRT-LLM is that companies will be able to build complex generative AI without the total cost of ownership skyrocketing.
