The first statistically significant results are in: not only can Large Language Model (LLM) AIs generate new expert-level scientific research ideas, but their ideas are more original and exciting than the best of ours – as judged by human experts.

Recent breakthroughs in large language models (LLMs) have excited researchers about the potential to revolutionize scientific discovery, with models like ChatGPT and Anthropic’s Claude showing an ability to autonomously generate and validate new research ideas.

This, of course, was one of the many things most people assumed AIs could never take over from humans: the ability to generate new knowledge and make new scientific discoveries, as opposed to stitching together existing knowledge from their training data.

But as with artistic expression, music composition, coding, understanding subtext and body language, and any number of other emergent abilities, today’s multimodal AIs do appear to be able to generate novel research – more novel on average than their human counterparts.

This had not been put to a rigorous test until recently, when over 100 natural language processing (NLP) research experts (PhDs and postdocs from 36 different, well-regarded institutions) took part in a study pitting human-written research ideas against those of an LLM-powered ‘ideation agent’ to see whose ideas were more original, exciting and feasible – as judged by human experts.

In our new paper: https://t.co/xjhjUC1j8J

We recruited 49 expert NLP researchers to write novel ideas on 7 NLP topics.

We built an LLM agent to generate research ideas on the same 7 topics.

After that, we recruited 79 experts to blindly review all the human and LLM ideas.

— CLS (@ChengleiSi) September 9, 2024

NLP is a branch of artificial intelligence that deals with how computers process and produce human language, so that humans and AIs can communicate in language both sides can ‘understand’ – in terms of basic syntax, but also nuance and, more recently, verbal tone and emotional inflection.

The 49 human experts wrote ideas on 7 NLP topics, while an LLM agent built by the researchers generated ideas on the same 7 topics. The study paid US$300 for each idea, plus a bonus of $1,000 for each of the top five human ideas, in an effort to incentivize the humans to produce ideas that were legitimate, easy to follow and easy to execute.

Once the ideas were submitted, an LLM was used to standardize the writing style of each entry while preserving its original content, in order to level the playing field and keep the study as blind as possible.

When we say “experts”, we really do mean some of the best people in the field.

Coming from 36 different institutions, our participants are mostly PhDs and postdocs.

As a proxy metric, our idea writers have a median citation count of 125, and our reviewers have 327.

— CLS (@ChengleiSi) September 9, 2024

All the submissions were then blind-reviewed by 79 recruited human experts. The panel submitted 298 reviews, giving each idea between two and four independent reviews.

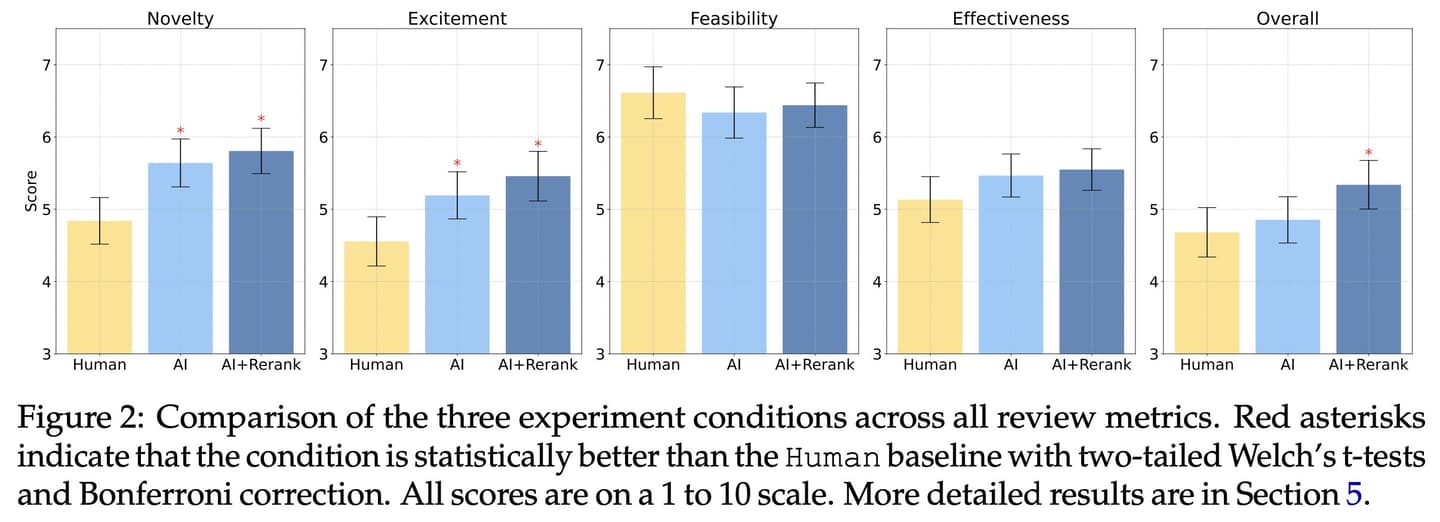

And sure enough, when it comes to novelty and excitement, the AI-generated ideas scored significantly higher than those of the human researchers. They ranked slightly lower than the human ideas on feasibility, and slightly higher on effectiveness – but neither of these effects was found to be statistically significant.

Image: Chenglei Si
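For readers wondering what "statistically significant" means in practice, here is a minimal sketch of one common way to compare two sets of review scores: a permutation test on the difference in means. This is an illustration only. The article does not say which test the researchers used, the `permutation_test` function is a hypothetical helper, and the scores below are made up rather than taken from the study.

```python
import random


def permutation_test(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference in mean scores.

    Hypothetical helper for illustration; not the study's actual method.
    """
    rng = random.Random(seed)
    observed = sum(a) / len(a) - sum(b) / len(b)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        # Shuffle the group labels: if the groups do not really differ,
        # a random split should often produce a gap this large.
        rng.shuffle(pooled)
        left, right = pooled[:len(a)], pooled[len(a):]
        diff = sum(left) / len(left) - sum(right) / len(right)
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value above zero.
    return observed, (extreme + 1) / (n_resamples + 1)


# Made-up novelty scores on a 1-10 scale (NOT the study's data).
ai_scores = [6, 7, 5, 6, 7, 6, 5, 7, 6, 6]
human_scores = [5, 4, 6, 5, 4, 5, 5, 4, 6, 5]

diff, p_value = permutation_test(ai_scores, human_scores)
print(f"mean difference: {diff:.2f}, p-value: {p_value:.4f}")
```

A small p-value (conventionally below 0.05) means a gap that large would rarely arise from random relabeling alone. That is the sense in which the novelty and excitement differences count as significant while the feasibility and effectiveness differences do not.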

The study also uncovered certain flaws, such as the LLM’s lack of diversity in generating ideas and its limitations in self-evaluation. Even with explicit direction not to repeat itself, the LLM would quickly begin to do so. LLMs also weren’t able to review and score ideas with much consistency, and their scores agreed poorly with human judgments.

The study also acknowledges that the human side of judging the “originality” of an idea is rather subjective, even with a panel of experts.

To test more rigorously whether LLMs really do have an edge when it comes to autonomous scientific discovery, the researchers plan to recruit more expert participants. They propose a more comprehensive follow-up study, where the ideas generated by both AI and humans are fully developed into projects, allowing for a more in-depth exploration of their impact in real-world scenarios.

But these initial findings are certainly sobering. Humanity finds itself looking a strange new adversary in the eye. Language model AIs are becoming incredibly capable tools – but they’re still notoriously unreliable and prone to what AI companies call “hallucinations,” and what anyone else might call “BS.”

They can move mountains of paperwork – but there’s certainly no room for “hallucinations” in the rigor of the scientific method. Science can’t build on a foundation of BS. It’s already scandalous enough that by some estimates, at least 10% of research papers are currently being co-written – at the very least – by AIs.

On the other hand, we shouldn’t understate AI’s potential to radically accelerate progress in certain areas – as evidenced by DeepMind’s GNoME system, which knocked off about 800 years’ worth of materials discovery in a matter of months, and spat out recipes for about 380,000 new inorganic crystals that could have revolutionary potential in all sorts of areas.

This is the fastest-developing technology humanity has ever seen; it’s reasonable to expect that many of its flaws will be patched up and painted over within the next few years. Many AI researchers believe we’re approaching general superintelligence – the point at which generalist AIs will overtake expert knowledge in more or less all fields.

It’s certainly a strange feeling watching our greatest invention rapidly master so many of the things we thought made us special – including the very ability to generate novel ideas. Human ingenuity seems to be painting humans into a corner, as old gods of ever-diminishing gaps.

Still, in the immediate future, we can make the best progress as a symbiosis, with the best of organic and artificial intelligence working together, as long as we can keep our goals in alignment.

But if this is a competition, well, it’s AI: 1, humans: 0 for this round.

Source: Chenglei Si via X
