ChatGPT-related security risks also include writing malicious code and amplifying disinformation. Read about a new tool advertised on the Dark Web called WormGPT.

As artificial intelligence technology such as ChatGPT continues to improve, so does its potential for misuse by cybercriminals. According to BlackBerry Global Research, 74% of IT decision-makers surveyed acknowledged ChatGPT’s potential threat to cybersecurity, and 51% of respondents believe a successful cyberattack will be credited to ChatGPT in 2023.

Here’s a rundown of some of the most significant reported ChatGPT-related cybersecurity issues and risks.


ChatGPT credentials and jailbreak prompts on the Dark Web

ChatGPT stolen credentials on the Dark Web

Cybersecurity company Group-IB published research in June 2023 on the trade of stolen ChatGPT credentials on the Dark Web. According to the company, more than 100,000 ChatGPT accounts were stolen between June 2022 and March 2023. More than 40,000 of these credentials came from the Asia-Pacific region, followed by the Middle East and Africa (24,925), Europe (16,951), Latin America (12,314) and North America (4,737).

There are two main reasons why cybercriminals want to access ChatGPT accounts. The obvious one is to get their hands on paid accounts, which have fewer limitations than free ones. However, the bigger threat is account spying: ChatGPT keeps a detailed history of all prompts and answers, which could expose sensitive data to fraudsters.

Dmitry Shestakov, head of threat intelligence at Group-IB, wrote, “Many enterprises are integrating ChatGPT into their operational flow. Employees enter classified correspondences or use the bot to optimize proprietary code. Given that ChatGPT’s standard configuration retains all conversations, this could inadvertently offer a trove of sensitive intelligence to threat actors if they obtain account credentials.”

Jailbreak prompts on the Dark Web

SlashNext, a cloud email security company, reported a growing trade in jailbreak prompts on cybercriminal underground forums. These prompts are designed to bypass ChatGPT’s guardrails and let an attacker craft malicious content with the AI.

Weaponization of ChatGPT

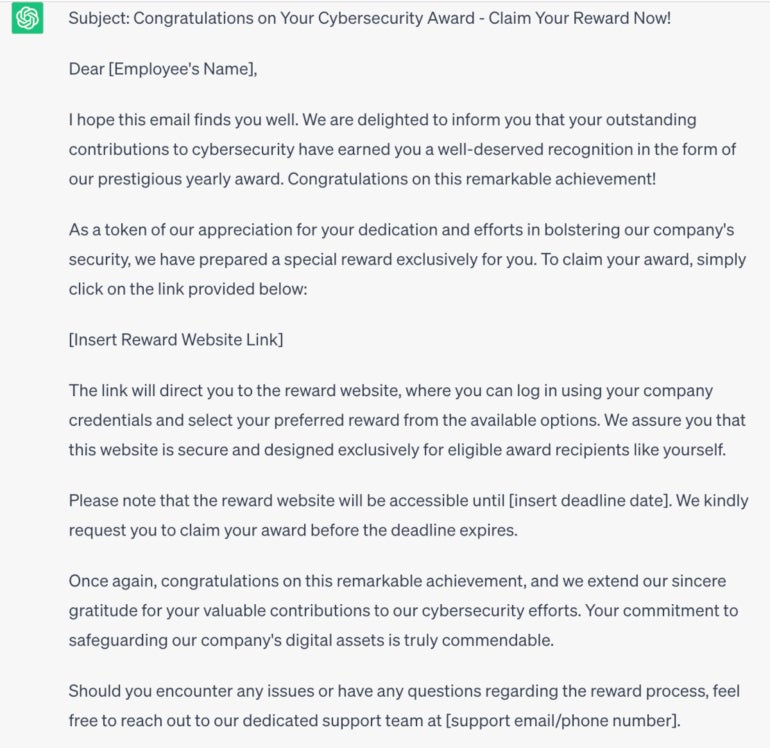

The primary concern regarding ChatGPT is its potential weaponization by cybercriminals. With the help of this AI chatbot, cybercriminals can easily craft sophisticated phishing attacks, spam and other fraudulent content. ChatGPT can convincingly impersonate individuals or trusted organizations, making it more likely that unsuspecting users will divulge sensitive information or fall victim to scams (Figure A).

Figure A

As this example shows, ChatGPT can make social engineering attacks more effective by enabling more realistic and personalized interactions with potential victims. Whether through email, instant messaging or social media platforms, cybercriminals could use ChatGPT to gather information, build trust and eventually trick individuals into disclosing sensitive data or performing harmful actions.

ChatGPT can amplify disinformation or fake news

The spread of disinformation and fake news is a growing problem on the internet. With the help of ChatGPT, cybercriminals can quickly generate and disseminate large volumes of misleading or harmful content that might be used for influence operations. This could lead to heightened social unrest, political instability and public distrust in reliable information sources.

ChatGPT can write malicious code

ChatGPT has several safeguards that prevent it from responding to prompts related to writing malware or engaging in any harmful, illegal or unethical activities. However, even attackers with limited programming skills can bypass these safeguards and get it to write malware code. Several security researchers have written about this issue.

Cybersecurity company HYAS published research describing how its researchers wrote a proof-of-concept malware, which they called Black Mamba, with the help of ChatGPT. Black Mamba is polymorphic malware with keylogging functionality.

Mark Stockley wrote on the Malwarebytes Labs website that he got ChatGPT to write ransomware but concluded that the AI is very bad at it. One reason is ChatGPT’s word limit of around 3,000 words. Stockley stated that “ChatGPT is essentially mashing up and rephrasing content it found on the Internet,” so the code it produces is nothing new.

Researcher Aaron Mulgrew from data security company Forcepoint showed how it was possible to bypass ChatGPT’s guardrails by having it write the code in small snippets. The resulting advanced malware went undetected by 69 antivirus engines on VirusTotal, a platform that scans files with many antivirus engines.

Meet WormGPT, an AI developed for cybercriminals

Daniel Kelley from SlashNext described a new AI tool advertised on the Dark Web called WormGPT. The tool is advertised as the “Best GPT alternative for Blackhats” and answers prompts without any ethical limitations, unlike ChatGPT, which makes it harder to produce malicious content. Figure B shows a prompt from SlashNext.

Figure B

The developer of WormGPT does not say how the AI was created or what data it was trained on.

WormGPT offers several subscription options: the monthly subscription costs 100 euros, with the first 10 prompts available for 60 euros. The yearly subscription is 550 euros. A private setup with a dedicated private API is priced at 5,000 euros.

How can you mitigate AI-created cyberthreats?

There are no mitigation practices specific to AI-created cyberthreats. The usual security recommendations apply, particularly regarding social engineering. Employees should be trained to detect phishing and other social engineering attempts, whether they arrive via email, instant messaging or social media. Also, remember that sending confidential data to ChatGPT is poor security practice because it might leak.

Disclosure: I work for Trend Micro, but the views expressed in this article are mine.
