Instead of equipping its sharp-looking GR-1 general-purpose humanoid with a full next-gen sensor suite that includes radar and LiDAR, Fourier Intelligence’s engineers have gone vision-only.

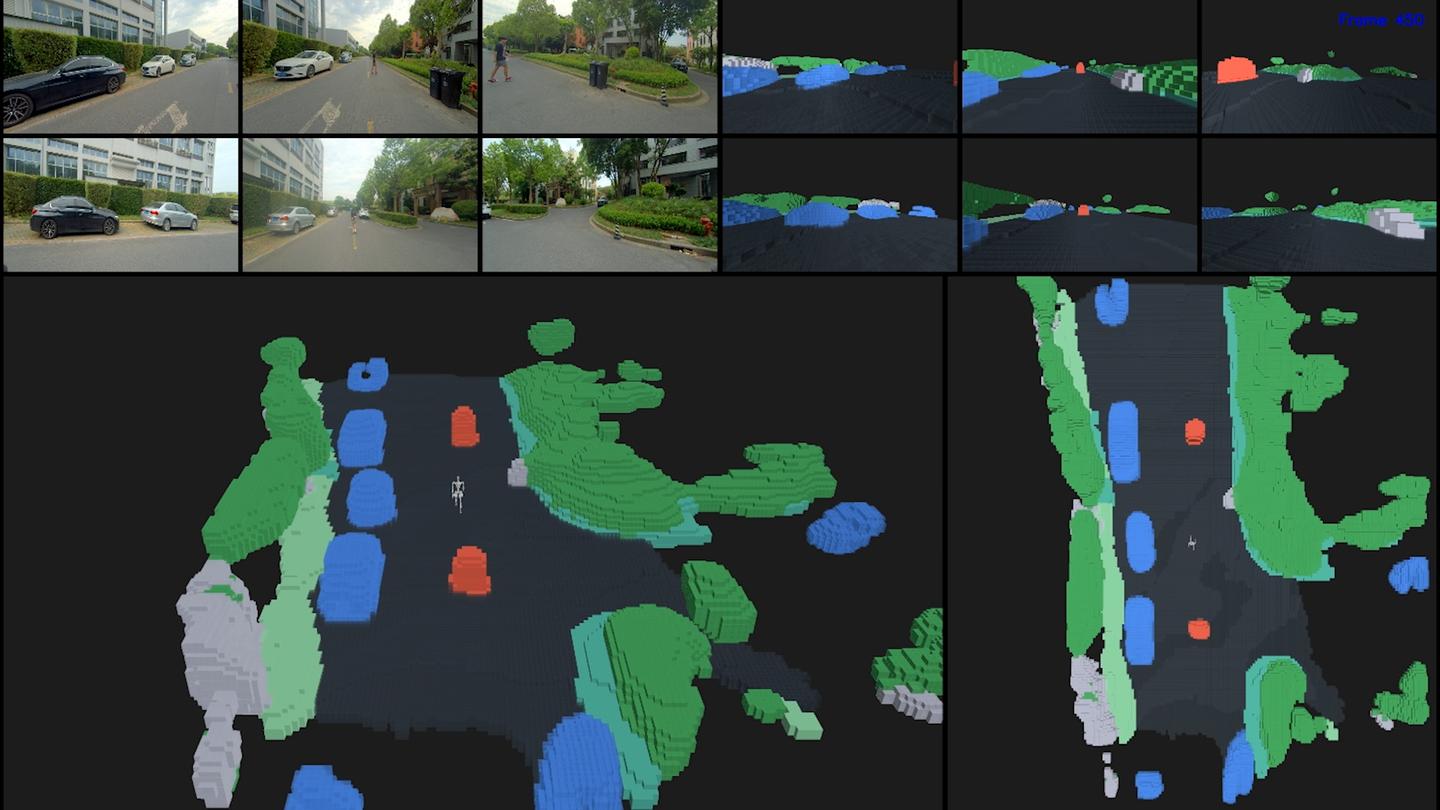

The GR-1 packs six RGB cameras around its frame for a 360-degree view of its surroundings. The camera feeds are also used to build a bird’s-eye-view (BEV) map, with a neural network that learns from context to generate 3D spatial features and virtual objects.
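
Fourier hasn’t detailed how its network lifts camera images into the bird’s-eye view. To illustrate the geometry such a network has to learn, here is a classical, non-learned stand-in (inverse perspective mapping), not Fourier’s transformer-based method: it projects a pixel onto flat ground for a level pinhole camera, then rotates the point into a shared robot-centred frame. The function names, the flat-ground and zero-pitch assumptions, the 60-degree camera spacing and the camera parameters in the example are all hypothetical.

```python
import math


def pixel_to_ground(u, v, fx, fy, cx, cy, cam_height):
    """Hypothetical helper: classical inverse perspective mapping, NOT Fourier's BEV network.

    Assumes a level (zero-pitch) pinhole camera over flat ground.
    Camera frame: x right, y down, z forward. Returns (forward, left) in metres,
    or None if the pixel sits at or above the horizon.
    """
    dy = (v - cy) / fy          # downward slope of the viewing ray
    if dy <= 0:
        return None             # the ray never meets the ground plane
    forward = cam_height / dy   # distance along the optical axis to the ground hit
    right = forward * (u - cx) / fx
    return forward, -right      # flip "right" to "left" for an x-forward, y-left frame


def camera_to_robot(forward, left, yaw_deg):
    """Rotate a ground point from a camera mounted at yaw_deg into the robot's BEV frame."""
    yaw = math.radians(yaw_deg)
    x = forward * math.cos(yaw) - left * math.sin(yaw)
    y = forward * math.sin(yaw) + left * math.cos(yaw)
    return x, y


# Made-up intrinsics: 640x480 image, fx = fy = 500 px, cameras 1.5 m above the ground,
# six cameras assumed to be spaced 60 degrees apart around the body.
for yaw in range(0, 360, 60):
    fwd, left = pixel_to_ground(320, 490, 500, 500, 320, 240, 1.5)
    print(yaw, camera_to_robot(fwd, left, yaw))
```

A learned BEV model avoids the flat-ground assumption that breaks this approach on slopes, kerbs and anything with height, such as pedestrians.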

The company says that the technology “then translates data into a three-dimensional occupancy grid, helping GR-1 navigate passable and impassable areas.” The bot has recently undertaken outdoor walking tests, where it’s reported to have demonstrated “high efficiency and accuracy in detecting vehicles and pedestrians along sidewalks” in real time.
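
The article doesn’t say how Fourier structures its occupancy grid, which it describes as three-dimensional and network-generated. A minimal sketch of the general idea, flattened to 2D and assuming a fixed-size grid with 0.5 m cells and three states (free, occupied, unknown), might look like the following. The class, its methods and the example geometry are hypothetical; the `reachable` method shows how such a grid can answer the “passable or impassable” question with a breadth-first search.

```python
from collections import deque

# Cell states for a hypothetical 2D occupancy grid (not Fourier's format).
FREE, OCCUPIED, UNKNOWN = 0, 1, -1


class OccupancyGrid:
    """A flat bird's-eye-view grid in the robot's ground plane."""

    def __init__(self, width, height, resolution):
        self.width = width            # number of cells along x
        self.height = height          # number of cells along y
        self.resolution = resolution  # metres per cell (assumed value below)
        # Every cell starts UNKNOWN until perception says otherwise.
        self.cells = [[UNKNOWN] * width for _ in range(height)]

    def to_cell(self, x_m, y_m):
        """Convert metric ground coordinates to integer (column, row) indices."""
        return int(x_m // self.resolution), int(y_m // self.resolution)

    def in_bounds(self, cx, cy):
        return 0 <= cx < self.width and 0 <= cy < self.height

    def mark_box(self, x0, y0, x1, y1, state):
        """Set every in-bounds cell containing part of the box [x0, x1] x [y0, y1]."""
        cx0, cy0 = self.to_cell(x0, y0)
        cx1, cy1 = self.to_cell(x1, y1)
        for cy in range(max(cy0, 0), min(cy1, self.height - 1) + 1):
            for cx in range(max(cx0, 0), min(cx1, self.width - 1) + 1):
                self.cells[cy][cx] = state

    def is_free(self, cx, cy):
        return self.in_bounds(cx, cy) and self.cells[cy][cx] == FREE

    def reachable(self, start, goal):
        """Breadth-first search over FREE cells using 4-connected moves."""
        s, g = self.to_cell(*start), self.to_cell(*goal)
        if not (self.is_free(*s) and self.is_free(*g)):
            return False
        seen = {s}
        queue = deque([s])
        while queue:
            cx, cy = queue.popleft()
            if (cx, cy) == g:
                return True
            for nxt in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                if nxt not in seen and self.is_free(*nxt):
                    seen.add(nxt)
                    queue.append(nxt)
        return False


# Example: a 5 m x 5 m patch of pavement at 0.5 m per cell (made-up numbers).
grid = OccupancyGrid(width=10, height=10, resolution=0.5)
grid.mark_box(0.0, 0.0, 4.99, 4.99, FREE)      # open ground seen by the cameras
grid.mark_box(2.0, 0.0, 2.49, 3.99, OCCUPIED)  # footprint of a detected obstacle
print(grid.reachable((0.2, 0.2), (4.8, 0.2)))  # True: a detour via rows 8-9 exists
```

Unknown cells are treated as impassable here, a conservative choice for a robot walking among pedestrians.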

Fourier Camera-Only Perception Network: BEV+Transformer+Occupancy

As with Tesla’s 2021 decision to run its Autopilot system mostly on camera-based vision, this approach should reduce hardware costs significantly, all while, according to the company, “enhancing GR-1’s environmental perception, achieving safer and more efficient operations with human-like precision.”

The current GR-1 model looks very different to the skeletal open-faced biped prototypes we were introduced to last year. Fourier’s product page states that it’s able to walk at speed with a human-like gait across various surfaces, with adaptive balance algorithms helping to keep it upright when ascending or descending slopes.

The company reports that it features 54 degrees of freedom across its body, which breaks down as three each in the head and waist, seven in each arm, eleven in each five-digit hand, and six in each leg. The bot boasts a peak joint torque of 230 Nm. It also wears funky purple hip bumpers; why shouldn’t a humanoid be a slave to fashion?
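
Those per-part figures do add up to the quoted total: 3 + 3 + 2 × 7 + 2 × 11 + 2 × 6 = 54. A quick tally in Python, where the dictionary names are just our own bookkeeping:

```python
# Per-part degrees of freedom as reported for the GR-1; the data layout is ours.
DOF_PER_PART = {"head": 3, "waist": 3, "arm": 7, "hand": 11, "leg": 6}
# How many of each part the robot has.
PART_COUNT = {"head": 1, "waist": 1, "arm": 2, "hand": 2, "leg": 2}

total = sum(DOF_PER_PART[part] * PART_COUNT[part] for part in DOF_PER_PART)
print(total)  # 54
```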

Fourier Intelligence

AI smarts are said to include a “ChatGPT-like multimodal language model as well as advanced semantic knowledge, natural language processing and logical reasoning.”

The once-empty head now sports a face that’s home to a high-def display, audio speakers and a microphone. The all-around vision system not only allows it to map and navigate its surroundings in real time, but also feeds its obstacle- and collision-avoidance capabilities.

“This advancement marks a new stage of our research in embodied AI,” said Roger Cai, the company’s director of robot application research and development. “With our pure vision solution, GR-1 is poised to play a pivotal role in diverse applications such as medical rehabilitation, family services, reception and guidance, security inspection, emergency rescue, and industrial manufacturing.”

Source: Fourier Intelligence
