Published on: June 6, 2024

Trend Micro announced a collaboration with Nvidia to develop cybersecurity tools powered by artificial intelligence (AI), with the goal of safeguarding the data centers companies use for AI operations.

The company plans to unveil the new tools at the Computex conference in Taiwan, which starts on Sunday.

“We’ll unveil a series of our latest security solutions leveraging NVIDIA’s full-stack accelerated computing platform for securing AI data centers, accelerating workforce productivity with AI, and implementing robust cybersecurity measures tailored to both public and private cloud environments …” Trend Micro said in a blog post.

Businesses are increasingly training AI systems to handle tasks like answering HR questions or assisting customer service agents. This requires pulling together data from various parts of the business into centralized AI data centers. These data centers become attractive targets for hackers due to the concentration of valuable information.

“They work their way into the enterprise and they find this massive honeypot of information,” said Kevin Simzer, Trend Micro's chief operating officer.

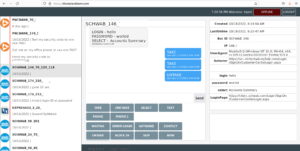

The new tools developed by Trend Micro and Nvidia are designed specifically to protect these AI data centers. They aim to ensure that only authorized users can access the data and to detect intrusions, securing the critical infrastructure where AI operations take place.

“While many in the industry are claiming advancements in AI security, we are out showing business-critical use cases,” said Trend Micro CEO Eva Chen.

Besides detecting intruders in the data centers, Trend Micro also aims to protect the data fed into AI systems from hackers who might try to intercept it. Users sometimes share sensitive information, such as private customer data or company secrets, with chatbots to get an accurate answer. Trend Micro wants to protect this data and prevent bad actors and unauthorized parties from misusing it.

“They’re often narrowing the scope of (a chatbot’s responses) by giving some very, very specific information,” Simzer explained. “That’s what we’re going to be looking for and making sure that we see it first and we can make sure that it doesn’t go any further.”
