If you have containers gobbling up too much of your Kubernetes cluster CPU, Jack Wallen shows you how to limit the upper and lower ranges.

Image: Jack Wallen

When you create your Kubernetes pods and containers, by default they have no CPU constraints: a container can use as much CPU as the node it runs on has available. This can be both good and bad. It’s good because your containers won’t be held back by an arbitrary cap. It’s bad because a single container can consume all of the CPU on its node, leaving nothing for the other workloads scheduled there.

You might not want that, especially when you have numerous pods that house crucial containers to keep your business humming along.

What do you do?

You limit the CPU ranges in your pods.

This is actually a fairly simple task, because CPU ranges are defined per namespace. I’m going to walk you through the process of defining CPU limits in the default namespace. From there, you can apply the same approach to all of your namespaces.
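A LimitRange is a namespaced object. One way to reuse this policy in another namespace is to name that namespace in the manifest’s metadata. The sketch below assumes a hypothetical namespace called dev that already exists. The spec is the same one this article builds for the default namespace.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limit-range
  namespace: dev        # hypothetical namespace; it must already exist
spec:
  limits:
  - max:
      cpu: "1"          # upper bound for each container's CPU limit
    min:
      cpu: "200m"       # lower bound for each container's CPU request
    type: Container
```

If you leave out metadata.namespace, kubectl creates the object in the namespace you pass with --namespace (or -n). Without that flag, it uses your current context’s namespace, which is usually default.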

SEE: Hiring kit: Database administrator (TechRepublic Premium)

What you’ll need

The only thing you’ll need to make this work is a running Kubernetes cluster. I’ll be demonstrating with a single controller and three nodes, but you’ll do all the work on the controller.

How to limit CPU ranges

We’re going to create a new YAML file to limit the ranges for containers in the default namespace. Open a terminal window and issue the command:

nano limit-range.yml

The important options for this YAML file are kind and cpu. For kind we’re going to use LimitRange, which is a policy that constrains resource allocations (for Pods or Containers) within a namespace. The cpu option defines which resource we are limiting. You can also limit the amount of memory available with the memory option, but we’re going to stick with cpu for now.
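For reference, memory sits alongside cpu in the same limits entry. This is a sketch only. The 128Mi and 512Mi values are placeholders chosen for illustration, not recommendations, and the file in this tutorial leaves memory out.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limit-range
spec:
  limits:
  - max:
      cpu: "1"
      memory: "512Mi"   # placeholder value, not from the article
    min:
      cpu: "200m"
      memory: "128Mi"   # placeholder value, not from the article
    type: Container
```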

Our YAML file will look like this:

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limit-range
spec:
  limits:
  - max:
      cpu: "1"
    min:
      cpu: "200m"
    type: Container
```

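CPU quantities can be written in two ways: as whole (or fractional) CPUs, or in milliCPU, marked with the m suffix, where 1000m equals one CPU. Here we’re capping each container at a maximum of 1 CPU and requiring a minimum of 200 milliCPU (200m). In a LimitRange, max is checked against a container’s CPU limit and min against its CPU request. Once you’ve written the file, save and close it.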
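Because 1000m equals one CPU, the same bounds can be written with the two notations swapped. The fragment below shows only the spec section. It illustrates the units and is not meant to replace the file above. Kubernetes should treat both forms as identical quantities.

```yaml
# spec section only: equivalent to the limits in limit-range.yml.
# "1000m" is the same quantity as "1", and "0.2" is the same as "200m".
spec:
  limits:
  - max:
      cpu: "1000m"
    min:
      cpu: "0.2"
    type: Container
```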

Before you apply the YAML file, check to see if there’s already a range limit set with the command:

kubectl get limitrange

The above command shouldn’t report anything back.

Now, run the command to create the limitrange like so:

kubectl create -f limit-range.yml

If you re-run the check, you should see it report back the name limit-range and the time/date it was created. You can then check to see that the proper limits were set with the command:

kubectl describe limitrange limit-range

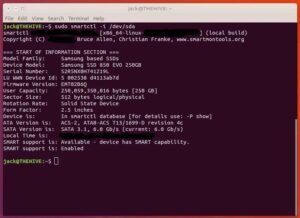

You should see that the minimal is 200m and the max is 1 (Figure A).

Figure A

” data-credit rel=”noopener noreferrer nofollow”>

We’ve successfully set CPU range limits for the default namespace of our Kubernetes cluster.
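To see the policy in action, you could try creating a pod whose container requests less than the 200m minimum. The sketch below is a hypothetical test manifest. The pod name cpu-too-small, the container name app and the nginx image are all illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cpu-too-small   # hypothetical test pod
spec:
  containers:
  - name: app           # hypothetical container name
    image: nginx        # any image works; nginx is only an example
    resources:
      requests:
        cpu: "100m"     # below the 200m minimum, so admission should reject it
      limits:
        cpu: "500m"     # within the 1 CPU maximum
```

Suppose you save this as cpu-test.yml (also a hypothetical name). Running kubectl create -f cpu-test.yml in the default namespace should then fail with an error that cites the 200m minimum. Raising the request to 200m or more, while keeping the limit at or below 1, should let the pod through.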

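And that’s all there is to setting limits on how much and how little CPU your containers can gobble up from your Kubernetes cluster. With this LimitRange in place, every container created in the namespace ends up with a CPU request of at least 200m and a CPU limit of no more than one CPU, so no single container can drain its node dry. Keep in mind that the LimitRange is enforced when pods are created, so pods that were already running before you applied it aren’t affected.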

Also see

Source of Article